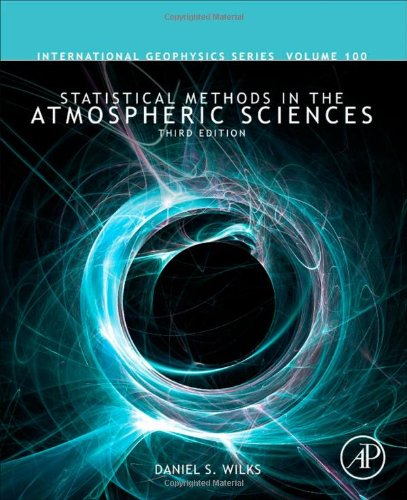

Statistical Methods in the Atmospheric Sciences, 3rd Edition, by Daniel Wilks (ISBN 978-0123850225, 0123850223)

Price: $35.00 (original price: $50.00)

Statistical Methods in the Atmospheric Sciences, 3rd Edition, by Daniel S. Wilks: ebook PDF, instant download/delivery. ISBN: 978-0123850225, 0123850223

The full download of Statistical Methods in the Atmospheric Sciences, 3rd Edition, is available after payment.

Product details:

ISBN 10: 0123850223

ISBN 13: 978-0123850225

Author: Daniel S. Wilks

Statistical Methods in the Atmospheric Sciences, Third Edition, explains the latest statistical methods used to describe, analyze, test, and forecast atmospheric data. This revised and expanded text is intended to help students understand and communicate what their data sets have to say, or to make sense of the scientific literature in meteorology, climatology, and related disciplines.

In this new edition, what was a single chapter on multivariate statistics has been expanded to a full six chapters on this important topic. Other chapters have also been revised and cover exploratory data analysis, probability distributions, hypothesis testing, statistical weather forecasting, forecast verification, and time series analysis. There is now an expanded treatment of resampling tests and key analysis techniques, an updated discussion on ensemble forecasting, and a detailed chapter on forecast verification. In addition, the book includes new sections on maximum likelihood and on statistical simulation and contains current references to original research. Students will benefit from pedagogical features including worked examples, end-of-chapter exercises with separate solutions, and numerous illustrations and equations.

This book will be of interest to researchers and students in the atmospheric sciences, including meteorology, climatology, and other geophysical disciplines.

Accessible presentation and explanation of techniques for atmospheric data summarization, analysis, testing and forecasting

Many worked examples

End-of-chapter exercises, with answers provided

Table of contents:

Part I

Preliminaries

1. Introduction

1.1. What Is Statistics?

1.2. Descriptive and Inferential Statistics

1.3. Uncertainty about the Atmosphere

2. Review of Probability

2.1. Background

2.2. The Elements of Probability

2.2.1. Events

2.2.2. The Sample Space

2.2.3. The Axioms of Probability

2.3. The Meaning of Probability

2.3.1. Frequency Interpretation

2.3.2. Bayesian (Subjective) Interpretation

2.4. Some Properties of Probability

2.4.1. Domain, Subsets, Complements, and Unions

2.4.2. DeMorgan’s Laws

2.4.3. Conditional Probability

2.4.4. Independence

2.4.5. Law of Total Probability

2.4.6. Bayes’ Theorem

2.5. Exercises

Part II

Univariate Statistics

3. Empirical Distributions and Exploratory Data Analysis

3.1. Background

3.1.1. Robustness and Resistance

3.1.2. Quantiles

3.2. Numerical Summary Measures

3.2.1. Location

3.2.2. Spread

3.2.3. Symmetry

3.3. Graphical Summary Devices

3.3.1. Stem-and-Leaf Display

3.3.2. Boxplots

3.3.3. Schematic Plots

3.3.4. Other Boxplot Variants

3.3.5. Histograms

3.3.6. Kernel Density Smoothing

3.3.7. Cumulative Frequency Distributions

3.4. Reexpression

3.4.1. Power Transformations

3.4.2. Standardized Anomalies

3.5. Exploratory Techniques for Paired Data

3.5.1. Scatterplots

3.5.2. Pearson (Ordinary) Correlation

3.5.3. Spearman Rank Correlation and Kendall’s τ

3.5.4. Serial Correlation

3.5.5. Autocorrelation Function

3.6. Exploratory Techniques for Higher-Dimensional Data

3.6.1. The Star Plot

3.6.2. The Glyph Scatterplot

3.6.3. The Rotating Scatterplot

3.6.4. The Correlation Matrix

3.6.5. The Scatterplot Matrix

3.6.6. Correlation Maps

3.7. Exercises

4. Parametric Probability Distributions

4.1. Background

4.1.1. Parametric versus Empirical Distributions

4.1.2. What Is a Parametric Distribution?

4.1.3. Parameters versus Statistics

4.1.4. Discrete versus Continuous Distributions

4.2. Discrete Distributions

4.2.1. Binomial Distribution

4.2.2. Geometric Distribution

4.2.3. Negative Binomial Distribution

4.2.4. Poisson Distribution

4.3. Statistical Expectations

4.3.1. Expected Value of a Random Variable

4.3.2. Expected Value of a Function of a Random Variable

4.4. Continuous Distributions

4.4.1. Distribution Functions and Expected Values

4.4.2. Gaussian Distributions

4.4.3. Gamma Distributions

4.4.4. Beta Distributions

4.4.5. Extreme-Value Distributions

4.4.6. Mixture Distributions

4.5. Qualitative Assessments of the Goodness of Fit

4.5.1. Superposition of a Fitted Parametric Distribution and Data Histogram

4.5.2. Quantile-Quantile (Q-Q) Plots

4.6. Parameter Fitting Using Maximum Likelihood

4.6.1. The Likelihood Function

4.6.2. The Newton-Raphson Method

4.6.3. The EM Algorithm

4.6.4. Sampling Distribution of Maximum-Likelihood Estimates

4.7. Statistical Simulation

4.7.1. Uniform Random-Number Generators

4.7.2. Nonuniform Random-Number Generation by Inversion

4.7.3. Nonuniform Random-Number Generation by Rejection

4.7.4. Box-Muller Method for Gaussian Random-Number Generation

4.7.5. Simulating from Mixture Distributions and Kernel Density Estimates

4.8. Exercises

5. Frequentist Statistical Inference

5.1. Background

5.1.1. Parametric versus Nonparametric Inference

5.1.2. The Sampling Distribution

5.1.3. The Elements of Any Hypothesis Test

5.1.4. Test Levels and p Values

5.1.5. Error Types and the Power of a Test

5.1.6. One-Sided versus Two-Sided Tests

5.1.7. Confidence Intervals: Inverting Hypothesis Tests

5.2. Some Commonly Encountered Parametric Tests

5.2.1. One-Sample t Test

5.2.2. Tests for Differences of Mean under Independence

5.2.3. Tests for Differences of Mean for Paired Samples

5.2.4. Tests for Differences of Mean under Serial Dependence

5.2.5. Goodness-of-Fit Tests

5.2.6. Likelihood Ratio Tests

5.3. Nonparametric Tests

5.3.1. Classical Nonparametric Tests for Location

5.3.2. Mann-Kendall Trend Test

5.3.3. Introduction to Resampling Tests

5.3.4. Permutation Tests

5.3.5. The Bootstrap

5.4. Multiplicity and “Field Significance”

5.4.1. The Multiplicity Problem for Independent Tests

5.4.2. Field Significance and the False Discovery Rate

5.4.3. Field Significance and Spatial Correlation

5.5. Exercises

6. Bayesian Inference

6.1. Background

6.2. The Structure of Bayesian Inference

6.2.1. Bayes’ Theorem for Continuous Variables

6.2.2. Inference and the Posterior Distribution

6.2.3. The Role of the Prior Distribution

6.2.4. The Predictive Distribution

6.3. Conjugate Distributions

6.3.1. Definition of Conjugate Distributions

6.3.2. Binomial Data-Generating Process

6.3.3. Poisson Data-Generating Process

6.3.4. Gaussian Data-Generating Process

6.4. Dealing with Difficult Integrals

6.4.1. Markov Chain Monte Carlo (MCMC) Methods

6.4.2. The Metropolis-Hastings Algorithm

6.4.3. The Gibbs Sampler

6.5. Exercises

7. Statistical Forecasting

7.1. Background

7.2. Linear Regression

7.2.1. Simple Linear Regression

7.2.2. Distribution of the Residuals

7.2.3. The Analysis of Variance Table

7.2.4. Goodness-of-Fit Measures

7.2.5. Sampling Distributions of the Regression Coefficients

7.2.6. Examining Residuals

7.2.7. Prediction Intervals

7.2.8. Multiple Linear Regression

7.2.9. Derived Predictor Variables in Multiple Regression

7.3. Nonlinear Regression

7.3.1. Generalized Linear Models

7.3.2. Logistic Regression

7.3.3. Poisson Regression

7.4. Predictor Selection

7.4.1. Why Is Careful Predictor Selection Important?

7.4.2. Screening Predictors

7.4.3. Stopping Rules

7.4.4. Cross Validation

7.5. Objective Forecasts Using Traditional Statistical Methods

7.5.1. Classical Statistical Forecasting

7.5.2. Perfect Prog and MOS

7.5.3. Operational MOS Forecasts

7.6. Ensemble Forecasting

7.6.1. Probabilistic Field Forecasts

7.6.2. Stochastic Dynamical Systems in Phase Space

7.6.3. Ensemble Forecasts

7.6.4. Choosing Initial Ensemble Members

7.6.5. Ensemble Average and Ensemble Dispersion

7.6.6. Graphical Display of Ensemble Forecast Information

7.6.7. Effects of Model Errors

7.7. Ensemble MOS

7.7.1. Why Ensembles Need Postprocessing

7.7.2. Regression Methods

7.7.3. Kernel Density (Ensemble “Dressing”) Methods

7.8. Subjective Probability Forecasts

7.8.1. The Nature of Subjective Forecasts

7.8.2. The Subjective Distribution

7.8.3. Central Credible Interval Forecasts

7.8.4. Assessing Discrete Probabilities

7.8.5. Assessing Continuous Distributions

7.9. Exercises

8. Forecast Verification

8.1. Background

8.1.1. Purposes of Forecast Verification

8.1.2. The Joint Distribution of Forecasts and Observations

8.1.3. Scalar Attributes of Forecast Performance

8.1.4. Forecast Skill

8.2. Nonprobabilistic Forecasts for Discrete Predictands

8.2.1. The 2 × 2 Contingency Table

8.2.2. Scalar Attributes of the 2 × 2 Contingency Table

8.2.3. Skill Scores for 2 × 2 Contingency Tables

8.2.4. Which Score?

8.2.5. Conversion of Probabilistic to Nonprobabilistic Forecasts

8.2.6. Extensions for Multicategory Discrete Predictands

8.3. Nonprobabilistic Forecasts for Continuous Predictands

8.3.1. Conditional Quantile Plots

8.3.2. Scalar Accuracy Measures

8.3.3. Skill Scores

8.4. Probability Forecasts for Discrete Predictands

8.4.1. The Joint Distribution for Dichotomous Events

8.4.2. The Brier Score

8.4.3. Algebraic Decomposition of the Brier Score

8.4.4. The Reliability Diagram

8.4.5. The Discrimination Diagram

8.4.6. The Logarithmic, or Ignorance Score

8.4.7. The ROC Diagram

8.4.8. Hedging, and Strictly Proper Scoring Rules

8.4.9. Probability Forecasts for Multiple-Category Events

8.5. Probability Forecasts for Continuous Predictands

8.5.1. Full Continuous Forecast Probability Distributions

8.5.2. Central Credible Interval Forecasts

8.6. Nonprobabilistic Forecasts for Fields

8.6.1. General Considerations for Field Forecasts

8.6.2. The S1 Score

8.6.3. Mean Squared Error

8.6.4. Anomaly Correlation

8.6.5. Field Verification Based on Spatial Structure

8.7. Verification of Ensemble Forecasts

8.7.1. Characteristics of a Good Ensemble Forecast

8.7.2. The Verification Rank Histogram

8.7.3. Minimum Spanning Tree (MST) Histogram

8.7.4. Shadowing, and Bounding Boxes

8.8. Verification Based on Economic Value

8.8.1. Optimal Decision Making and the Cost/Loss Ratio Problem

8.8.2. The Value Score

8.8.3. Connections with Other Verification Approaches

8.9. Verification When the Observation is Uncertain

8.10. Sampling and Inference for Verification Statistics

8.10.1. Sampling Characteristics of Contingency Table Statistics

8.10.2. ROC Diagram Sampling Characteristics

8.10.3. Brier Score and Brier Skill Score Inference

8.10.4. Reliability Diagram Sampling Characteristics

8.10.5. Resampling Verification Statistics

8.11. Exercises

9. Time Series

9.1. Background

9.1.1. Stationarity

9.1.2. Time-Series Models

9.1.3. Time-Domain versus Frequency-Domain Approaches

9.2. Time Domain-I. Discrete Data

9.2.1. Markov Chains

9.2.2. Two-State, First-Order Markov Chains

9.2.3. Test for Independence versus First-Order Serial Dependence

9.2.4. Some Applications of Two-State Markov Chains

9.2.5. Multiple-State Markov Chains

9.2.6. Higher-Order Markov Chains

9.2.7. Deciding among Alternative Orders of Markov Chains

9.3. Time Domain-II. Continuous Data

9.3.1. First-Order Autoregression

9.3.2. Higher-Order Autoregressions

9.3.3. The AR(2) Model

9.3.4. Order Selection Criteria

9.3.5. The Variance of a Time Average

9.3.6. Autoregressive-Moving Average Models

9.3.7. Simulation and Forecasting with Continuous Time-Domain Models

9.4. Frequency Domain-I. Harmonic Analysis

9.4.1. Cosine and Sine Functions

9.4.2. Representing a Simple Time Series with a Harmonic Function

9.4.3. Estimation of the Amplitude and Phase of a Single Harmonic

9.4.4. Higher Harmonics

9.5. Frequency Domain-II. Spectral Analysis

9.5.1. The Harmonic Functions as Uncorrelated Regression Predictors

9.5.2. The Periodogram, or Fourier Line Spectrum

13.4. Maximum Covariance Analysis (MCA)

13.5. Exercises

14. Discrimination and Classification

14.1. Discrimination versus Classification

14.2. Separating Two Populations

14.2.1. Equal Covariance Structure: Fisher’s Linear Discriminant

14.2.2. Fisher’s Linear Discriminant for Multivariate Normal Data

14.2.3. Minimizing Expected Cost of Misclassification

14.2.4. Unequal Covariances: Quadratic Discrimination

14.3. Multiple Discriminant Analysis (MDA)

14.3.1. Fisher’s Procedure for More Than Two Groups

14.3.2. Minimizing Expected Cost of Misclassification

14.3.3. Probabilistic Classification

14.4. Forecasting with Discriminant Analysis

14.5. Alternatives to Classical Discriminant Analysis

14.5.1. Discrimination and Classification Using Logistic Regression

14.5.2. Discrimination and Classification Using Kernel Density Estimates

14.6. Exercises

15. Cluster Analysis

15.1. Background

15.1.1. Cluster Analysis versus Discriminant Analysis

15.1.2. Distance Measures and the Distance Matrix

15.2. Hierarchical Clustering

15.2.1. Agglomerative Methods Using the Distance Matrix

15.2.2. Ward’s Minimum Variance Method

15.2.3. The Dendrogram, or Tree Diagram

15.2.4. How Many Clusters?

15.2.5. Divisive Methods

15.3. Nonhierarchical Clustering

15.3.1. The K-Means Method

15.3.2. Nucleated Agglomerative Clustering

15.3.3. Clustering Using Mixture Distributions

15.4. Exercises
